As we get ready to celebrate Selenium's 10-year journey at vodQA Hyderabad, ThoughtWorks Chennai is ready to take vodQA to the next level on Saturday, 21st February 2015, with an interesting cocktail of topics related to Software Testing.

Register here as a speaker for vodQA Chennai, or here as an attendee.

Wednesday, January 28, 2015

Thursday, January 15, 2015

Reading does help bring about fearless change ...

[Update] The link to the article was wrong :( I have corrected it now.

I have shared my experience on How I Turned My Idea Into A Product on ThoughtWorks Insights. This proves (to me) that reading does help.

Labels: automation, change, collaboration, feedback, influence, innovation, learning, lindarising, opensource, patterns, pune, punedashboard, pyramid, testing, thoughtworks, tta, visualization

Tuesday, January 13, 2015

Start 2015 by Celebrating Selenium in vodQA Hyderabad

ThoughtWorks Hyderabad is proud to host its first vodQA, which is also the first vodQA of 2015, and to kick off the celebration of 10 years of Selenium. The event will be held on Saturday, 31st January 2015.

Look at the agenda of this vodQA and register soon. Given that we have mostly workshops in this vodQA, seats are going to be limited!

Here is the address of, and directions to, the ThoughtWorks office.

UPDATE:

Slides for my talk on the "Future of Testing, Test Automation and the Quality Analyst" are now available here:

Labels: #vodqa, automation, automation_framework, conference, design_pattern, hyderabad, learning, meetup, opensource, patterns, selenium, testing, testing_conference, thoughtworks, vodQA, vodQAHyd

Wednesday, December 24, 2014

Disruptive Testing with Julian Harty

As part of the Disruptive Testing series, the last interview of 2014, with Julian Harty, is now available here (http://www.thoughtworks.com/insights/blog/disruptive-testing-part-8-julian-harty) as a video. The transcript has also been published.

Also look at ThoughtWorks Insights for other great articles on a variety of topics and themes.

Wednesday, December 17, 2014

Testing in the Medical domain

I recently had the opportunity to do some testing, though for a very short time, in the Medical domain - something I have always aspired to. I learnt a lot in this time and gained a great deal of appreciation for people working in such mission-critical domains.

Some of these experiences have been published here as "A Humbling Experience in Oncology Treatment Testing" on ThoughtWorks Insights. I look forward to your comments and feedback.

Saturday, November 22, 2014

To Deploy or Not to Deploy - decide using Test Trend Analyzer (TTA) in AgilePune 2014

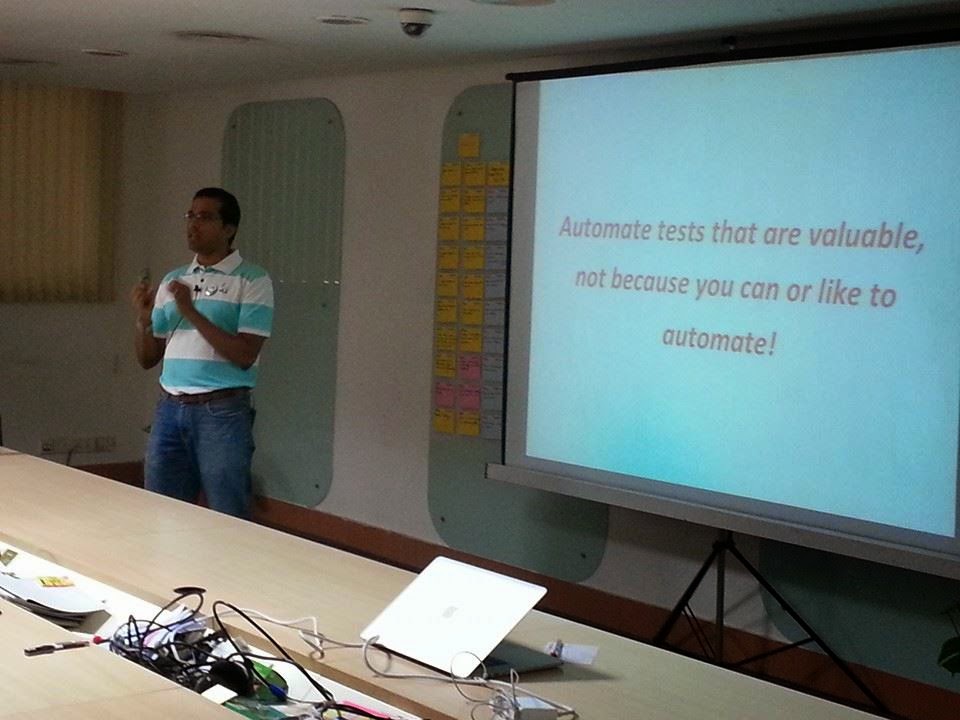

I spoke on the topic - "To Deploy or Not to Deploy - decide using Test Trend Analyzer (TTA)" in Agile Pune, 2014.

The slides from the talk are available here, and the video is available here.

Below is some information about the content.

The key objective of any organization is to provide / derive value from the products / services it offers. To achieve this, it needs to deliver its offerings as quickly as possible, and with good quality!

To understand the quality / health of their products at a quick glance, organizations typically have a team of people scramble to manually collect and collate the information needed to get a sense of the quality of the products they support.

So, in a fast-moving environment where CI (Continuous Integration) and CD (Continuous Delivery) are now a necessity and not a luxury, how can teams decide whether the product is ready to be deployed to the next environment?

Test Automation across all layers of the Test Pyramid is one of the first building blocks to ensure the team gets quick feedback into the health of the product-under-test.
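To make "automation across all layers of the Test Pyramid" a little more concrete, here is a minimal Python sketch that counts automated tests per layer and checks whether the suite is actually pyramid-shaped. The layer names (`unit`, `integration`, `ui`), the `(test_name, layer)` input format and the "each layer strictly smaller than the one below it" rule are all illustrative assumptions; this is not how TTA computes its Test Pyramid View.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Layers from the bottom (widest) to the top (narrowest) of the pyramid.
# These names are illustrative; use whatever layers your suites map to.
LAYERS = ("unit", "integration", "ui")


def layer_counts(results: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Count automated tests per layer from hypothetical (test_name, layer) pairs."""
    counts = Counter(layer for _, layer in results)
    return {layer: counts.get(layer, 0) for layer in LAYERS}


def is_pyramid_shaped(counts: Dict[str, int]) -> bool:
    """True when every layer has strictly fewer tests than the layer below it."""
    sizes = [counts[layer] for layer in LAYERS]
    return all(lower > upper for lower, upper in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    suite = (
        [(f"unit_{i}", "unit") for i in range(50)]
        + [(f"api_{i}", "integration") for i in range(10)]
        + [(f"ui_{i}", "ui") for i in range(3)]
    )
    counts = layer_counts(suite)
    print(counts)                     # {'unit': 50, 'integration': 10, 'ui': 3}
    print(is_pyramid_shaped(counts))  # True

    # An "ice-cream cone": mostly UI tests and few unit tests.
    cone = {"unit": 5, "integration": 10, "ui": 40}
    print(is_pyramid_shaped(cone))    # False
```

A strict "smaller than the layer below" rule is deliberately crude; in practice the desired ratios between layers depend on the product and the team.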

The next set of questions is:

- How can you collate this information in a meaningful fashion to determine - yes, my code is ready to be promoted from one environment to the next?

- How can you know if the product is ready to go 'live'?

- What is the health of your product portfolio at any point in time?

- Can you identify patterns and quickly analyse the test results to help with root-cause analysis of issues that have happened over a period of time, and so make better decisions to improve the quality of your product(s)?

The solution - TTA - Test Trend Analyzer

TTA is an open source product that becomes the source of information, giving you real-time, visual insights into the health of the product portfolio using the Test Automation results, in the form of Trends, Comparative Analysis, Failure Analysis and Functional Performance Benchmarking. This allows teams to make decisions about deploying the product to the next level using actual data points, instead of 'gut-feel'-based decisions.

There are 2 audiences who will benefit from TTA:

1. Management - who want to know, in real time, the latest state of test execution trends across their product portfolios / projects. They can also use the data represented in the trend analysis views to make more informed decisions about which products / projects need more or less focus. Views like the Test Pyramid View and Comparative Analysis help in looking at results over a period of time and using them as data points to identify trends.

2. Team Members (developers / testers) - who want to analyse test failures quickly to get to the root cause as soon as possible. Views like Compare Runs, Failure Analysis and Test Execution Trend help the team on a day-to-day basis.
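As an illustration of the kind of question a "Compare Runs" view answers, here is a minimal Python sketch that classifies tests by how their status changed between two runs. The run format (a dict of test name to `"pass"` / `"fail"`) and the category names are hypothetical; TTA's own data model and implementation may well differ.

```python
from typing import Dict, List

# Hypothetical shape of one test run: test name -> "pass" or "fail".
Run = Dict[str, str]


def compare_runs(previous: Run, latest: Run) -> Dict[str, List[str]]:
    """Classify each test in `latest` by how its status changed since `previous`."""
    diff: Dict[str, List[str]] = {
        "newly_failing": [],  # passed before, fails now: a likely regression
        "fixed": [],          # failed before, passes now
        "still_failing": [],  # failed in both runs: a known, open problem
        "new_tests": [],      # not present in the previous run
    }
    for name in sorted(latest):
        before, now = previous.get(name), latest[name]
        if before is None:
            diff["new_tests"].append(name)
        elif before == "pass" and now == "fail":
            diff["newly_failing"].append(name)
        elif before == "fail" and now == "pass":
            diff["fixed"].append(name)
        elif before == "fail" and now == "fail":
            diff["still_failing"].append(name)
    return diff


if __name__ == "__main__":
    previous = {"login": "pass", "search": "fail", "checkout": "pass", "cart": "fail"}
    latest = {"login": "fail", "search": "pass", "checkout": "pass", "cart": "fail", "profile": "fail"}
    print(compare_runs(previous, latest))
    # {'newly_failing': ['login'], 'fixed': ['search'], 'still_failing': ['cart'], 'new_tests': ['profile']}
```

The "newly_failing" bucket is usually where root-cause analysis starts. Tests that disappeared from the latest run are ignored in this sketch; a real tool would probably report them too.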

NOTE: TTA does not claim to give answers to the potential problems. It gives a visual representation of test execution results in different formats which allow team members / management to have more focussed conversations based on data points.

Some pictures from the talk ... (Thanks to Shirish)

Labels: agile, agilepune2014, automation, collaboration, conference, feedback, innovation, opensource, pune, punedashboard, pyramid, ruby, test_pyramid, testing, testing_conference, thoughtworks, tta, visualization

Saturday, November 15, 2014

The decade of Selenium

Selenium has been around for over a decade now. To mark the occasion, ThoughtWorks has published an eBook titled "Perspectives on Agile Software Testing", which is available for free download.

I have written a chapter in the eBook - "Is Selenium Finely Aged Wine?"

An excerpt of this chapter is also published as a blog post on utest.com. You can find that here.

Monday, November 10, 2014

Perspectives on Agile Software Testing

Inspired by Selenium's 10th Birthday Celebration, a bunch of ThoughtWorkers have compiled an anthology of essays on testing approaches, tools and culture by testers for testers.

This anthology is available as an ebook titled "Perspectives on Agile Software Testing", which you can download from here on the ThoughtWorks site after a simple registration.

Here are the contents of the ebook:

Enjoy the read; I look forward to your feedback.

Monday, October 13, 2014

vodQA - Breaking Boundaries in Pune

[UPDATE] - The event was a great success, despite the rain gods trying to dissuade participants from joining in. For those who missed it, or who want to revisit talks they could not attend, the videos have been uploaded and are available here on YouTube.

[UPDATE] - Latest count: >350 interested attendees, who will get to listen to 6 talks and 3 lightning talks, and attend 3 workshops. Not to forget the fun and networking with a highly charged audience at the ThoughtWorks, Pune office. Be there, or be left out! :)

I am very happy to write that the next vodQA is scheduled in ThoughtWorks, Pune on 15th November 2014. The theme this time around is "Breaking Boundaries".

You can register as an attendee here, and register as a speaker here. You can submit more than one topic for speaker registration - just email vodqa-pune@thoughtworks.com with details on the topics.

Sunday, September 7, 2014

Perils of Page-Object Pattern

I spoke at Selenium Conference (SeConf 2014) in Bangalore on 5th September, 2014 on "The Perils of Page-Object Pattern".

The Page-Object pattern is very commonly used when implementing Automation frameworks. However, as the framework grows, there is a limit to how much re-use really happens, and it becomes very difficult to separate the test intent from the business domain.

I talked about this problem and the solution I have been using, the "Business Layer - Page-Object" pattern, which has helped me keep my code DRY.
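A minimal Python sketch of the layering idea, assuming a hypothetical `FakeBrowser` in place of a real driver such as Selenium WebDriver so that it runs without a browser. Page objects know how to drive a page (locators, typing, clicking); the business layer on top expresses what the user wants to do in domain terms, and tests talk only to the business layer. All class, method and locator names here are illustrative, not the code from the talk.

```python
from typing import List


class FakeBrowser:
    """Hypothetical stand-in for a real driver (e.g. Selenium WebDriver).
    It 'searches' a fixed list of titles so the sketch runs without a browser."""

    def __init__(self, corpus: List[str]) -> None:
        self.corpus = corpus
        self.query = ""
        self.results: List[str] = []

    def type(self, locator: str, text: str) -> None:
        if locator == "#query":
            self.query = text

    def click(self, locator: str) -> None:
        if locator == "#submit":
            q = self.query.lower()
            self.results = [t for t in self.corpus if q in t.lower()]

    def texts(self, locator: str) -> List[str]:
        return list(self.results) if locator == ".result-title" else []


# Page objects: know HOW to drive one page (locators, clicks, typing).
class SearchPage:
    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def search(self, term: str) -> "ResultsPage":
        self.browser.type("#query", term)
        self.browser.click("#submit")
        return ResultsPage(self.browser)


class ResultsPage:
    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def result_titles(self) -> List[str]:
        return self.browser.texts(".result-title")


# Business layer: expresses WHAT the user wants to do, in domain terms.
# Tests call only this layer, so a change in page flow is absorbed here
# instead of in every test that performs a search.
class SearchBusinessLayer:
    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def search_finds(self, term: str, expected_title: str) -> bool:
        titles = SearchPage(self.browser).search(term).result_titles()
        return expected_title in titles


if __name__ == "__main__":
    browser = FakeBrowser(["Selenium HQ", "Page Object pattern", "cucumber-jvm docs"])
    search = SearchBusinessLayer(browser)
    # The test reads as intent: no locators or page navigation in sight.
    print(search.search_finds("selenium", "Selenium HQ"))  # True
```

If the search flow changes (say, a new confirmation step), only `SearchPage` and `SearchBusinessLayer` need updating; every test that calls `search_finds` stays the same.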

The slides from the talk are available here. The video is available here.

Video taken by a professional:

Video taken from my laptop:

Slides:

If you want to see other slides and videos from SeConf, see the SeConf schedule page.

Labels: automation, automation_framework, conference, cucumber, design_pattern, domain, innovation, java, mobile_testing, patterns, pyramid, ruby, seconf, selenium, test_pyramid, testing, testing_conference, thoughtworks

Thursday, July 31, 2014

Enabling Continuous Delivery (CD) in Enterprises with Testing

I spoke about "Enabling Continuous Delivery (CD) in Enterprises with Testing" at Unicom's World Conference on Next Generation Testing.

I started this talk by stating that I was going to prove that "A Triangle = A Pentagon".

I am happy to say that I was able to prove that "A Triangle IS A Pentagon" and, in fact, left reasonable doubt in the audience's mind that "A Triangle CAN BE an n-dimensional Polygon".

Confused? How is this related to Continuous Delivery (CD), or Testing? See the slides and the video from the talk to know more.

This topic is also available on ThoughtWorks Insights.

Below are some pictures from the conference.

Labels: agile, automation, automation_framework, bangalore, collaboration, conference, domain, feedback, innovation, meetup, pune, pyramid, tdd, test_pyramid, testing, testing_conference, thoughtworks, tta, visualization

Sunday, June 29, 2014

What reporting / reporters do you use with Selenium / WebDriver?

Test execution reports are usually an afterthought when doing automation.

- What reporting techniques have you used on your automation projects (language / tools probably do not matter)?

- What is the test log format used?

- Do you use any special / different plugins, or rely on the CI tools with some plugins?

- What value do you get out of these reports?

Friday, June 27, 2014

The Feedback Tradeoff

If you are a tester doing or involved with Test Automation, or a developer, I hope you are following the exciting debate about Test Driven Development (TDD) and its impact on software design. If you are not, you should be!

My summary and takeaways from Part 3 of the series is now out on ThoughtWorks Insights - "The Feedback Tradeoff".

Monday, June 23, 2014

To Deploy or Not to Deploy - decide using Test Trend Analyzer (TTA)

[UPDATE: 18th July 2014] I spoke on the same topic - "To Deploy or Not to Deploy - decide using Test Trend Analyzer (TTA)" at Unicom's World Conference on Next Generation Testing in Bangalore on 18th July 2014. The slides are available here and the video is available here. In this talk, I also gave a demo of TTA.

I spoke at 3 conferences last week about "To Deploy or Not to Deploy - decide using Test Trend Analyzer (TTA)"

You can find the slides here and the videos here:

Here is the abstract of the talk:

The key objective of any organization is to provide / derive value from the products / services it offers. To achieve this, it needs to deliver its offerings as quickly as possible, and with good quality. To understand the quality / health of their products at a quick glance, organizations typically have a team of people scramble to manually collect and collate the information needed to get a sense of the quality of the products they support. So, in a fast-moving environment where CI (Continuous Integration) and CD (Continuous Delivery) are now a necessity and not a luxury, how can teams decide whether the product is ready to be deployed to the next environment? Test Automation across all layers of the Test Pyramid is one of the first building blocks to ensure the team gets quick feedback on the health of the product-under-test.

The next set of questions is:

• How can you collate this information in a meaningful fashion to determine - yes, my code is ready to be promoted from one environment to the next?

• How can you know if the product is ready to go 'live'?

• What is the health of your product portfolio at any point in time?

• Can you identify patterns and quickly analyse the test results to help with root-cause analysis of issues that have happened over a period of time, and so make better decisions to improve the quality of your product(s)?

The current set of tools is limited and fails to give a holistic picture of quality and health across the life-cycle of the products.

The solution - TTA - Test Trend Analyzer

TTA is an open source product that becomes the source of information, giving you real-time, visual insights into the health of the product portfolio using the Test Automation results, in the form of Trends, Comparative Analysis, Failure Analysis and Functional Performance Benchmarking. This allows teams to make decisions about deploying the product to the next level using actual data points, instead of 'gut-feel'-based decisions.
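To illustrate what a data-based (rather than gut-feel) promotion signal might look like, here is a minimal Python sketch that computes the pass rate of each run and flags whether the recent trend looks healthy. The run summary format, the 95% threshold and the 3-run window are assumptions made for this example, not TTA features; such a signal is meant to inform the team's decision, not replace it.

```python
from typing import List, Sequence, Tuple

# Hypothetical summary of one automated test run: (run_id, passed, total).
RunSummary = Tuple[str, int, int]


def pass_rates(runs: Sequence[RunSummary]) -> List[float]:
    """Pass percentage of each run, in execution order (0.0 for an empty run)."""
    return [round(100.0 * passed / total, 1) if total else 0.0 for _, passed, total in runs]


def ready_to_promote(runs: Sequence[RunSummary], min_rate: float = 95.0, window: int = 3) -> bool:
    """Simple data-based signal: each of the last `window` runs meets `min_rate`,
    and the latest run is at least as good as the oldest run in the window.
    The default threshold and window are illustrative assumptions."""
    recent = pass_rates(runs)[-window:]
    if len(recent) < window:
        return False  # too few data points to talk about a trend
    meets_threshold = all(rate >= min_rate for rate in recent)
    not_declining = recent[-1] >= recent[0]
    return meets_threshold and not_declining


if __name__ == "__main__":
    runs = [
        ("build-101", 180, 200),
        ("build-102", 192, 200),
        ("build-103", 196, 200),
        ("build-104", 198, 200),
    ]
    print(pass_rates(runs))        # [90.0, 96.0, 98.0, 99.0]
    print(ready_to_promote(runs))  # True

    # A sudden drop in the latest run should stop the promotion.
    print(ready_to_promote(runs + [("build-105", 185, 200)]))  # False
```

Looking at a window of runs rather than only the latest one is the design choice here: a single green run after a string of red ones says less about health than a stable trend does.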

Labels: #vodqa, agile, automation, automation_framework, chennai, collaboration, conference, gurgaon, innovation, opensource, performance, ruby, testing, testing_conference, thoughtworks, tta, visualization, vodQA

Saturday, June 21, 2014

Future of Testing, Test Automation and The Quality Analyst

In this edition of vodQA - "Let your testing do the talking" at ThoughtWorks, Bangalore, I spoke on what I think is the "Future of Testing, Test Automation and the Quality Analyst".

The slides from the talk are available here and the video is available here.

Watch out for updates from other exciting and intriguing talks from this edition of vodQA.

Below are some pictures from my talk.

Join the vodQA group on Facebook to stay updated on upcoming vodQA events and happenings.

Wednesday, June 18, 2014

Anna Royzman speaks on encouraging women in Testing and more ...

The next part of the Disruptive Testing series is out. This time, read thought-provoking insights from Anna Royzman on topics like how to encourage more women in Testing, the changing role of a Tester, the importance of role models and mentors in the profession, and more.

Tuesday, June 3, 2014

Let your testing do the talking

The next edition of vodQA is here - on 21st June 2014 at ThoughtWorks, Bangalore. The theme of this event is "Let your testing do the talking".

To attend, you can register here.

See you on 21st June.

Monday, June 2, 2014

Is TDD Dead?

If you are a tester doing or involved with Test Automation, or a developer, I hope you are following the exciting debate about Test Driven Development (TDD) and its impact on software design. If you are not, you should be!

Following Part 2 of the series, "Test-induced design damage", I wrote a blog post that is published on ThoughtWorks Insights - "Test-Induced Design Damage. Fallacy or Reality?"

[UPDATE]: My summary and takeaways from Part 3 of the series is now out on ThoughtWorks Insights - "The Feedback Tradeoff".

Tuesday, May 20, 2014

Disruptive Testing - An interview with Justin Rohrman

See how heuristics, domain knowledge and music relate to Software Testing in the next interview in the series, with Justin Rohrman, available on ThoughtWorks Insights.

Monday, May 19, 2014

WAAT at StarEast2014

I was speaking about "Build the 'right' regression suite using Behavior Driven Testing (BDT)" at StarEast 2014 and met Marcus Merrell, who was speaking about "Automated Analytics Testing with Open Source Tools". Deep into our conversation, I found out that he had used WAAT, and that a few others at the table were also aware of WAAT and had used it before. It felt great!

Thursday, May 15, 2014

Update from StarEast 2014 - "Build the 'right' regression suite using BDT"

I had a great time speaking about how to "Build the 'right' regression suite using Behavior Driven Testing (BDT)".

This time, I truly understood the value of practice and dry-runs. Before the webinar for the NY Selenium Meetup group, I presented the content to my colleagues in the ThoughtWorks, Pune office and also shared it with the hivemind @ ThoughtWorks, and got great feedback. That helped me tremendously in making my content more rock-solid and well-tuned.

As a result, I was able to deliver the content to a very enthusiastic and curious audience, who turned up in great numbers (>105) to hear what the $@$#^$% BDT is, and how it can help avoid the nightmare of long, unfruitful, painful Regression Test cycles.

The slides from the talk are available here and the video is available here.

Thursday, May 8, 2014

Update from Webinar on "Build the 'right' regression suite using BDT" for NY Selenium Meetup

I had a challenging, yet good, time speaking in a webinar for the New York Selenium Meetup community on how to "Build the 'right' regression suite using Behavior Driven Testing (BDT)". This webinar was conducted on 6th May 2014 at 6.30pm, and I am very thankful to Mona Soni for helping organize it.

Before I speak about the challenges, here are the slides and the audio + screen recording from the webinar. The video is not cleaned up: I started recording the session and then we waited a few minutes before starting, so skip forward to around the 01:15 mark, where the audio starts.

This was challenging for 2 main reasons:

> With a webinar, I find it difficult to connect with the audience. I am not able to gauge if the content is something they already know about, so I can proceed faster. Or, if they are not following, I need to go slower. Or, the topic is just not interesting enough to them. There may be other reasons as well, but I just do not get that real-time feedback which is so important when explaining a concept and a technique.

Though there were some good interactions and great questions via chat, I missed that eye-to-eye connection. This webinar was conducted using GoToMeeting. Next time I do this, I should try to get webcams enabled for at least a good few of the attendees, so I can read their body language.

> The 2nd challenge was purely my own body not being able to adjust well enough. I had flown in from India to Florida to speak at the STAREAST 2014 conference just a couple of days earlier, and was still adjusting to the jet-lag. Evenings turned out to be my lowest-energy points of the day, and I found myself struggling to keep focus, talk and respond effectively. I would like to apologize to the attendees if they felt my delivery was not up to the mark for this reason.

I appreciate any feedback on the session, and look forward to connecting with you to talk about Testing, Test Automation, my open-source tools (TaaS, WAAT, TTA) and, of course, BDT!

Labels: agile, automation, automation_framework, bdt, collaboration, conference, cucumber, innovation, meetup, opensource, punedashboard, selenium, stareast, testing, testing_conference, thoughtworks

Wednesday, April 30, 2014

TaaS blog post featured on ThoughtWorks Insights

My presentation on "Automate Your Tests across Platform, OS, Technologies with TaaS" is featured on the ThoughtWorks Insights page.

Monday, April 21, 2014

What are the criteria for determining success for Test Automation?

Test Automation is often thought of as a silver bullet that will solve the team's testing problems. As a result, there is heavy investment of time, money and people in building an automated suite of tests of different types that is expected to solve all problems.

Is that really the case? We know the theory of what is required to make Test Automation successful for the team. I want to know, from a practical perspective and with context, what did or did not work for you.

I am currently writing about "Building an Enterprise-class Test Automation Framework", and am gathering experience reports based on what others in the industry have seen.

I am looking for people to share stories from their current / past experiences of full or limited success with Test Automation, answering at least the questions below:

Context:

- What is the product under test like? (small / med / large / enterprise) (web / desktop / mobile / etc.)

- How long is the Test Automation framework envisioned to be used? (a few months, a year or two, more than a few years, etc.)

- What is the size of the team (overall, and for test automation)?

- Is the testing team co-located or distributed?

- What are the tools / technologies used for testing?

- Are the skills and capabilities uniform across team members?

- Is the domain a factor in determining the success criteria?

Framework related:

- What are the factors determining the success / failure of Test Automation implementation?

- What worked for you?

- What did not work as well?

- What could have been different in the above to make Test Automation a success story?

- What are the enablers in your opinion to make Test Automation successful?

- What are the blockers / anchors in your opinion that prevented Test Automation from being successful?

- Does it matter if the team is working in Waterfall methodology or Agile methodology?

Thursday, April 10, 2014

Sample test automation framework using cucumber-jvm

I wanted to learn and experiment with cucumber-jvm. My approach was to think of a real **complex scenario that needed to be automated, and then build a cucumber-jvm-based framework to achieve the following goals:

- Learn how cucumber-jvm works

- Create a bare-bones framework with all the basic requirements that can be reused

So, without further ado, I introduce to you the cucumber-jvm-sample Test Automation Framework, hosted on github.

The following functionality is implemented in this framework:

- Tests specified using cucumber-jvm

- Build tool: Gradle

- Programming language: Groovy (for Gradle) and Java

- Test Data Management: samples showing how to use data specified in feature files, AND data from separate JSON files

- Browser automation: Using WebDriver for browser interaction

- Web Service automation: using the Apache CXF library to generate client code from web service WSDL files, and invoke methods on it

- Take screenshots on demand and save them to disk

- Integrated cucumber-reports to get 'pretty' and 'meaningful' reports from test execution

- Using an Apache logger to store test logs in files (and also report to the console)

- Using AspectJ for bytecode injection to automatically log the test trace to a file. A separate benchmarks file is also created to track the time taken by each method. This information can be analyzed in other tools, like Excel, to identify patterns in test execution.
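The AspectJ-based benchmarking itself is not shown in the post. Below is a minimal plain-Java sketch of the same idea that uses no bytecode weaving. It assumes each "key" method is wrapped explicitly. It times each call, records the entry even when the call throws, and renders CSV that could be written to a benchmarks file and opened in Excel. The class `Benchmarks` and its methods are hypothetical. With AspectJ, an around advice would apply this kind of timing automatically instead of requiring a wrapper at each call site.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical plain-Java stand-in for AspectJ-based benchmarking:
// every call wrapped in time(...) is measured and recorded.
class Benchmarks {

    // One record per timed call: the action name and elapsed nanoseconds.
    record Entry(String action, long elapsedNanos) {}

    private final List<Entry> entries = new ArrayList<>();

    // Runs the body, records its duration even if it throws, and returns its result.
    <T> T time(String action, Supplier<T> body) {
        long start = System.nanoTime();
        try {
            return body.get();
        } finally {
            entries.add(new Entry(action, System.nanoTime() - start));
        }
    }

    List<Entry> entries() {
        return List.copyOf(entries);
    }

    // CSV text, one row per timed call, e.g. for a benchmarks file opened in Excel.
    String toCsv() {
        StringBuilder csv = new StringBuilder("action,elapsed_ms\n");
        for (Entry e : entries) {
            csv.append(e.action()).append(',')
               .append(e.elapsedNanos() / 1_000_000.0).append('\n');
        }
        return csv.toString();
    }

    public static void main(String[] args) {
        Benchmarks bench = new Benchmarks();
        // Placeholder "key" action; in a real suite this would drive the browser.
        String title = bench.time("searchGoogle", () -> "Google search results");
        System.out.println(title);
        System.out.print(bench.toCsv());
    }
}
```

The sketch needs Java 16+ because it uses records. Persisting the CSV could be as simple as `Files.writeString(Path.of("benchmarks.csv"), bench.toCsv())` (Java 11+).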

Feel free to fork and use this framework on your projects. If there are any other features you think are important to have in a Test Automation Framework, let me know. Even better would be to submit pull requests with those changes, which I will take a look at and accept if it makes sense.

** Pun intended :) The complex test I am talking about is a simple Google search.

Tuesday, April 8, 2014

Disruptive Testing - An interview with Matt Heusser

Read an interview with Matt Heusser to find out what he thinks about Lean Software Testing, Scaling Agile, Testing Metrics, and other thought-provoking questions.

Friday, March 28, 2014

WAAT Java v1.5.1 released today

After a long time, and with a lot of push from collaborators and users of WAAT, I have finally updated WAAT (Java) and made a new release today.

You can get this new version - v1.5.1 directly from the project's dist directory.

Once I get some feedback, I will also update WAAT-ruby with these changes.

Here is the list of changes in WAAT_v1.5.1:

Changes in v1.5.1

- Engine.isExpectedTagPresentInActualTagList in the Engine class is made public

- Updated Engine to work without creating a testData.xml file, by directly sending expectedSectionList for tags

- Added a new method Engine.verifyWebAnalyticsData(String actionName, ArrayList expectedSectionList, String[] urlPatterns, int minimumNumberOfPackets)

- Added an empty constructor for Section.java to prevent a marshalling error

- Support for fragmented packets

- Updated Engine to support Pattern comparison, instead of String contains
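The sketch below shows why Pattern comparison is more flexible than String contains. It is hypothetical and is not WAAT's source. It counts captured request URLs that match any of a set of regular expressions, then checks that count against a minimum. The parameters loosely mirror `urlPatterns` and `minimumNumberOfPackets` above. The URLs and the pattern are made up for illustration.

```java
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical illustration (not WAAT's source) of String contains vs Pattern matching
// when picking web analytics requests out of captured traffic.
class UrlPatternFilter {

    // Literal check: the URL must contain the exact fragment.
    static boolean matchesByContains(String url, String fragment) {
        return url.contains(fragment);
    }

    // Regex check: the URL counts if any pattern is found anywhere in it.
    static boolean matchesByPattern(String url, String[] urlPatterns) {
        for (String regex : urlPatterns) {
            if (Pattern.compile(regex).matcher(url).find()) {
                return true;
            }
        }
        return false;
    }

    // Are at least minimumNumberOfPackets captured URLs matched by one of the patterns?
    static boolean hasMinimumMatches(List<String> capturedUrls, String[] urlPatterns,
                                     int minimumNumberOfPackets) {
        long matched = capturedUrls.stream()
                .filter(url -> matchesByPattern(url, urlPatterns))
                .count();
        return matched >= minimumNumberOfPackets;
    }

    public static void main(String[] args) {
        List<String> captured = List.of(
                "https://analytics.example.com/dcs.gif?page=home",
                "https://www.example.com/static/logo.png",
                "http://analytics.example.com/dcs.gif?page=search");
        String[] patterns = {"^https?://analytics\\.example\\.com/.*\\.gif"};

        // The literal fragment misses the http:// request; the regex accepts both schemes.
        System.out.println(matchesByContains(captured.get(2), "https://analytics.example.com")); // false
        System.out.println(hasMinimumMatches(captured, patterns, 2)); // true
    }
}
```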

Thanks.

Thursday, February 27, 2014

Automate across Platform, OS, Technologies with TaaS

[Updated - link to slides, audio, experience report added]

The talk at Agile India 2014 went really well. A few things happened:

- My talk was the second-last talk on a Saturday, so my hopes of having a decent-sized audience were low. But I was very pleasantly surprised to see the room almost full.

- Usually, at the conferences I have spoken at, the ratio of technical / hands-on people vs. leads / managers is around 20:80. In this case, that ratio was almost inverted: about 70-80% of the audience were technical / hands-on people.

- Due to the more technical audience, there were great questions all along the way, which meant I could not finish on time. I actually went over by 5-6 minutes, and even then I had to rush through the last few sections of my presentation and could not do a complete demo.

- Almost everyone was able to relate to the challenges of integration test automation, the concept and the problem TaaS solves - which was a great validation for me!

- Unfortunately, I had to rush to the airport immediately after the talk, which prevented me from networking and discussing specifics with folks afterwards. Hopefully some of them will contact me to know more about TaaS!

The slides from the talk are available here on SlideShare. You can download the audio recording of the talk from here. The pictures and video should be available on the Agile India 2014 site soon. I will update the links when they become available.

--------

After what seems to be a long time, I am speaking at Agile India 2014 in Bangalore on "Automate across Platform, OS, Technologies with TaaS".

I am changing the format of the talk this time and am hoping to do a good demo instead of just showing code snippets. Hopefully no unpleasant surprises crop up in the demo!!

Other than that, I am really looking forward to interacting with a lot of fellow enthusiasts at the conference.

Slides and experience report to follow soon.

Friday, November 22, 2013

Organizing vodQA

My blog on "Organizing a successful meetup - Tips from vodQA" is now available from ThoughtWorks Insights page.

Monday, October 21, 2013

BDT in Colombo Selenium Meetup

[UPDATED again] Feedback and pictures from the virtual session on BDT

[UPDATED]

The slides and audio + slide recording have now been uploaded.

I will be talking virtually and remotely about "Building the 'right' regression suite using Behavior Driven Testing (BDT)" at Colombo's Selenium Meetup on Wednesday, 23rd October 2013 at 6pm IST.

If you are interested in joining virtually, let me know, and if possible, I will get you a virtual seat in the meetup.

Saturday, October 12, 2013

vodQA Pune - Faster | Smarter | Reliable schedule announced

A very impressive and engrossing schedule for vodQA Pune, to be held on Saturday, 19th October 2013 at ThoughtWorks, Pune, has now been announced. See the event page for more details.

I am going to be talking about "Real-time Trend and Failure Analysis using Test Trend Analyzer (TTA)"

Sunday, October 6, 2013

Offshore Testing on Agile Projects

Anand Bagmar

Reality of organizations

Organizations are now spread across the world. With this spread, having distributed teams is a reality. Reasons could be a combination of various factors, including:

- Globalization

- Cost

- 24x7 availability

- Team size

- Mergers and Acquisitions

- Talent

The Agile Software methodology talks about various principles for approaching Software Development. There are various practices that can be applied to achieve these principles.

The choice of practices is critical to ensuring the success of the project. Some of the parameters to consider, in no particular order, are:

- Skillset on the team

- Capability on the team

- Delivery objectives

- Distributed teams

- Working with partners / vendors?

- Organization security / policy constraints

- Tools for collaboration

- Time overlap between teams

- Mindset of team members

- Communication

- Test Automation

- Project Collaboration Tools

- Testing Tools

- Continuous Integration

** The above list is from a Software Testing perspective.

This post is about the practices we implemented as a team on an offshore testing project.

Case Study - A quick introduction

An enterprise had a B2B product providing an online version of a physically conducted auction for selling used vehicles, in real time and at high speed. Typical participation in this auction is by an auctioneer, multiple sellers, and potentially hundreds of buyers. Each sale can have up to 500 vehicles. Each vehicle gets sold / skipped in under 30 seconds, with multiple buyers potentially bidding on it at the same time. Key business rules: only 1 bid per buyer, and no consecutive bids by the same buyer.
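A rule like "no consecutive bids by the same buyer" is easy to state and easy to get wrong under load. The hypothetical sketch below models bidding on a single vehicle. It rejects a bid from the buyer who already holds the highest bid. Beyond what the post says, it assumes each accepted bid must exceed the current highest bid. The "only 1 bid per buyer" rule is not modeled here, because its exact semantics are not spelled out.

```java
import java.util.Optional;

// Hypothetical model of bidding on one vehicle; not the product's code.
class VehicleBidding {
    private String lastBidder;   // buyer holding the current highest bid (null before any bid)
    private long highestBid;     // opening price until the first accepted bid

    VehicleBidding(long openingPrice) {
        this.highestBid = openingPrice;
    }

    // Returns Optional.empty() if accepted, otherwise the reason for rejection.
    synchronized Optional<String> placeBid(String buyer, long amount) {
        if (buyer.equals(lastBidder)) {
            return Optional.of("consecutive bid by " + buyer);
        }
        if (amount <= highestBid) { // assumption: each bid must beat the current highest
            return Optional.of("bid must exceed " + highestBid);
        }
        lastBidder = buyer;
        highestBid = amount;
        return Optional.empty();
    }

    synchronized String lastBidder() { return lastBidder; }

    synchronized long highestBid() { return highestBid; }

    public static void main(String[] args) {
        VehicleBidding vehicle = new VehicleBidding(100);
        System.out.println(vehicle.placeBid("buyerA", 110)); // Optional.empty
        System.out.println(vehicle.placeBid("buyerA", 120)); // Optional[consecutive bid by buyerA]
        System.out.println(vehicle.placeBid("buyerB", 120)); // Optional.empty
    }
}
```

The method returns a rejection reason rather than a boolean. A test can then assert why a bid was refused, which helps when a scenario fails for an unexpected reason.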

Analysis and development were happening across 3 locations: 2 teams in the US and 1 team in Brazil. Only testing was happening from Pune, India.

George Bernard Shaw said:

“Success does not consist in never making mistakes but in never making the same one a second time.”

We took that to heart, very sincerely. We applied all our learnings and experiences in picking the practices that would make us succeed. We consciously sought to be creative and innovative, and applied out-of-the-box thinking to how we approached testing (in terms of strategy, process, tools and techniques) for this unique, interesting and extremely challenging application, ensuring we did not go down the same path again.

Challenges

We had to overcome many challenges on this project.

- Creating a common DSL that would be understood by ALL parties, i.e. Clients / Business / BAs / PMs / Devs / QAs.

- All examples / forums discuss trivial problems, whereas we had a lot of data and complex business scenarios to take care of.

- Cucumber / Capybara / WebDriver / Ruby do not provide an easy way to do concurrent / parallel testing.

- In our manual + automated tests, we needed to simulate "n" participants at a time interacting with the sale / auction.

- A typical sale / auction can contain 60-500 buyers, 1-x sellers and 1 auctioneer. The sale / auction can contain anywhere from 50-1000 vehicles to sell. There can be multiple sales going on in parallel. So how do we test these scenarios effectively?

- Data creation / usage is a huge problem (e.g. the production subset snapshot is > 10GB (compressed) in size, and a refresh takes a long time too).

- Getting a local environment in Pune to work effectively: all pairing stations / environment machines use RHEL Server 6.0 and are auto-configured using Puppet. These machines are registered to the client account on the Red Hat Satellite Server.

- Communication challenge: we are working from 10K miles away, with a time difference of 9.5 / 10.5 hours (depending on DST), which means almost 0 overlap with the distributed team. To add to that complexity, our BA was in another city in the US, so there was another time difference to take care of.

- End-to-end performance / load testing is not even part of this scope, but it is something we are very wary of, in terms of what can go wrong at that scale.

- We need to be agile, i.e. test stories and functionality in the same iteration.
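One way to simulate "n" participants hitting the same sale at the same moment is to park all simulated buyers behind a `CountDownLatch` and release them together. The outcome can then be checked against an invariant. The sketch below uses plain Java rather than the project's Ruby stack. The in-memory `Auction` is a hypothetical stand-in for the application under test. The invariant checked ("no consecutive bids by the same buyer") is borrowed from the case study's business rules.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical sketch: N simulated buyers are released at the same instant
// against a shared auction, then an invariant is checked on the outcome.
class ConcurrentBiddersSketch {

    // Tiny thread-safe stand-in for the application under test (assumption).
    static class Auction {
        private final List<String> acceptedBidders = new ArrayList<>();
        private long highestBid = 0;

        // Rejects a bid from the current highest bidder, or one that does not beat the highest bid.
        synchronized boolean bid(String buyer, long amount) {
            boolean consecutive = !acceptedBidders.isEmpty()
                    && acceptedBidders.get(acceptedBidders.size() - 1).equals(buyer);
            if (consecutive || amount <= highestBid) {
                return false;
            }
            acceptedBidders.add(buyer);
            highestBid = amount;
            return true;
        }

        synchronized long highestBid() { return highestBid; }

        synchronized List<String> acceptedBidders() { return new ArrayList<>(acceptedBidders); }
    }

    // Runs `buyers` threads that each try `bidsPerBuyer` times to outbid the current
    // highest bid; all threads wait on a start gate so they hit the auction together.
    static List<String> simulate(int buyers, int bidsPerBuyer) {
        Auction auction = new Auction();
        ExecutorService pool = Executors.newFixedThreadPool(buyers);
        CountDownLatch startGate = new CountDownLatch(1);
        CountDownLatch finished = new CountDownLatch(buyers);
        for (int b = 0; b < buyers; b++) {
            String buyer = "buyer-" + b;
            pool.submit(() -> {
                try {
                    startGate.await();
                    for (int i = 0; i < bidsPerBuyer; i++) {
                        // Read-then-bid is deliberately racy: other buyers may bid in between.
                        auction.bid(buyer, auction.highestBid() + 1);
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    finished.countDown();
                }
            });
        }
        startGate.countDown(); // release every buyer at once
        try {
            finished.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for buyers", e);
        } finally {
            pool.shutdown();
        }
        return auction.acceptedBidders();
    }

    // The invariant the concurrent test asserts: no buyer appears twice in a row.
    static boolean noConsecutiveBids(List<String> accepted) {
        for (int i = 1; i < accepted.size(); i++) {
            if (accepted.get(i).equals(accepted.get(i - 1))) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<String> accepted = simulate(100, 20);
        System.out.println("accepted bids: " + accepted.size());
        System.out.println("invariant holds: " + noConsecutiveBids(accepted));
    }
}
```

The start gate matters here. Without it, threads start at slightly different times and rarely collide, so the test would mostly exercise the sequential path.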

All the above-mentioned problems meant we had to come up with our own unique way of tackling the testing.

Our principles - our North Star

We stuck to a few guiding principles as our North Star:

- Keep it simple

- We know the goal, so evolve the framework - don't start building everything from step 1

- Keep sharing the approach / strategy / issues faced on a regular basis with all concerned parties, and make this a TEAM challenge instead of a Test team problem!

- Don't try to automate everything

- Keep test code clean

The End Result

At the end of the journey, here are some interesting outcomes from the offshore testing project:

- Tests were specified in the form of user journeys following the Behavior Driven Testing (BDT) philosophy, specified in Cucumber.

- Created a custom test framework (Cucumber, Capybara, WebDriver) that tests a real-time auction in a very deterministic fashion.

- We had 65-70 tests in the form of user journeys that cover the full automated regression for the product.

- Our regression completed in less than 30 minutes.

- We had no manual tests to be executed as part of regression.

- All tests (= user journeys) are documented directly in Cucumber scenarios and are automated.

- Anything that is not part of the user journeys is pushed down to the dev team to automate (or we try to write automation at that lower level).

- Created a ‘special’ long-running test suite that simulates a real sale with 400 vehicles, >100 buyers, 2 sellers and an auctioneer.

- Created special concurrent (high-speed parallel) tests that ensure the system behaves correctly even at the highest possible load.

- Since there was no separate performance and load test strategy, we created special utilities in the automation framework to benchmark “key” actions.

- No separate documentation or test cases were ever written / maintained, and we never missed them.

- A separate, special sanity test runs in production after deployment, to ensure all the integration points are set up properly.

- Changed our work timings (for most team members) to 12pm - 9pm IST to get more overlap and remote pairing time with the onsite team.

- Set up an ice-cream meter for those who came late to standup.
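The arithmetic behind the 9.5 / 10.5 hour difference and the 12pm - 9pm IST work timings can be checked with `java.time`. This worked example assumes the US team is in the `America/New_York` time zone and works 9am-6pm local time. The post does not say which US city or hours were involved. Under those assumptions, a 9am-6pm IST day has no overlap at all. Shifting to 12pm-9pm IST gives 2.5 hours of overlap in summer (EDT) and 1.5 hours in winter (EST).

```java
import java.time.*;

// Worked example of time-zone overlap. Assumptions (not from the post): the US team
// is in America/New_York and works 9am-6pm local time; Pune uses Asia/Kolkata (IST).
class OverlapSketch {

    // Offset difference in hours between two zones at noon UTC on the given date.
    static double offsetDifferenceHours(LocalDate date, ZoneId a, ZoneId b) {
        Instant noonUtc = date.atTime(LocalTime.NOON).atZone(ZoneOffset.UTC).toInstant();
        int secondsA = a.getRules().getOffset(noonUtc).getTotalSeconds();
        int secondsB = b.getRules().getOffset(noonUtc).getTotalSeconds();
        return (secondsA - secondsB) / 3600.0;
    }

    // Overlap in minutes between two working windows, both taken on the same calendar
    // date in their own zones (true here: the IST afternoon meets the US morning).
    static long overlapMinutes(LocalDate date,
                               LocalTime startA, LocalTime endA, ZoneId zoneA,
                               LocalTime startB, LocalTime endB, ZoneId zoneB) {
        Instant aStart = ZonedDateTime.of(date, startA, zoneA).toInstant();
        Instant aEnd = ZonedDateTime.of(date, endA, zoneA).toInstant();
        Instant bStart = ZonedDateTime.of(date, startB, zoneB).toInstant();
        Instant bEnd = ZonedDateTime.of(date, endB, zoneB).toInstant();
        Instant start = aStart.isAfter(bStart) ? aStart : bStart;
        Instant end = aEnd.isBefore(bEnd) ? aEnd : bEnd;
        return end.isAfter(start) ? Duration.between(start, end).toMinutes() : 0;
    }

    public static void main(String[] args) {
        ZoneId ist = ZoneId.of("Asia/Kolkata");
        ZoneId ny = ZoneId.of("America/New_York"); // assumption: US team on Eastern time
        LocalDate summer = LocalDate.of(2013, 7, 15); // EDT in effect
        LocalDate winter = LocalDate.of(2014, 1, 15); // EST in effect
        LocalTime nine = LocalTime.of(9, 0);
        LocalTime six = LocalTime.of(18, 0);
        LocalTime ninePm = LocalTime.of(21, 0);

        System.out.println(offsetDifferenceHours(summer, ist, ny)); // 9.5
        System.out.println(offsetDifferenceHours(winter, ist, ny)); // 10.5
        System.out.println(overlapMinutes(summer, nine, six, ist, nine, six, ny)); // 0
        System.out.println(overlapMinutes(summer, LocalTime.NOON, ninePm, ist, nine, six, ny)); // 150
        System.out.println(overlapMinutes(winter, LocalTime.NOON, ninePm, ist, nine, six, ny)); // 90
    }
}
```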

Innovations and Customizations

Necessity breeds innovation! This was so true on this project.

Below are the different areas and specifics of the customizations we did in our framework.

Dartboard

Created a custom board, “Dartboard”, to quickly visualize the testing status in the iteration. See this post for more details: “Dartboard – Are you on track?”

TaaS

To automate the last mile of Integration Testing between different applications, we created an open-source product, TaaS. It provides a platform / OS / tool / technology / language-agnostic way of automating the integration tests between applications.

Base premise for TaaS:

Enterprise-sized organizations have multiple products under their belt. The technology stack used for each of the products is usually different, for various reasons.

Most such organizations like to have a common Test Automation solution across these products in an effort to standardize the test automation framework.

However, this is not a good idea! If products in the same organization can be built using different / varied technology stacks, then why should you impose this restriction on the Test Automation environment?

Each product should be tested using the tools and technologies that are “right” for it.

“TaaS” is a product that allows you to achieve the “correct” way of doing Test Automation.

WAAT - Web Analytics Automation Testing Framework

I had created the WAAT framework for Java and Ruby in 2010/2011.

However, this framework had a limitation: it did not work for products that are configured to work only in https mode.

For one of the applications, we needed to test WebTrends reporting. Since this application worked only in https mode, I created a new plugin for WAAT, JS Sniffer, that can work with https-only applications. See my blog for more details about WAAT.
